Beginner’s Guide to Generative AI


You’ve almost certainly heard of generative AI. This subset of machine learning has become one of the most-used buzzwords in tech circles – and beyond.

Generative AI is everywhere right now. But what exactly is it? How does it work? How can we use it to make our lives (and jobs) easier?

As we enter a new era of artificial intelligence, generative AI is only going to become more and more common. If you need an explainer to cover all the basics, you’re in the right place. Read on to learn all about generative AI, from its humble beginnings in the 1960s to today – and its future, including all the questions about what may come next.

What is Generative AI?

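Generative AI refers to machine learning systems that can create new content, such as text, images, audio, or code, based on patterns learned from existing data. Generative AI algorithms use large datasets to create foundation models, which then serve as a base for generative AI systems that can perform different tasks. One of the most powerful capabilities of these models is self-supervised learning: they identify patterns in unlabeled data on their own, and those patterns allow them to generate different kinds of output.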

Why is Everyone Talking About Generative AI Right Now?

Generative AI has seen significant advancements in recent times. You’ve probably already used ChatGPT, one of the major players in the field and the fastest AI product to obtain 100 million users. Several other dominant and emerging AI tools have people talking: DALL-E, Bard, Jasper, and more.

Major tech companies are in a race against startups to harness the power of AI applications, whether it’s rewriting the rules of search, reaching significant market caps, or innovating in other areas. The competition is fierce, and these companies are putting in a lot of work to stay ahead.

The History of Generative AI

Generative AI’s history goes back to the 1960s when we saw early models like the ELIZA chatbot. ELIZA simulated conversation with users, creating seemingly original responses. However, these responses were actually based on a rules-based lookup table, limiting the chatbot’s capabilities.

A major leap in the development of generative AI came in 2014, with the introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow and his colleagues, then at the Université de Montréal. GANs are a type of neural network architecture that uses two networks: a generator and a discriminator.

The generator creates new content, while the discriminator evaluates that content against a dataset of real-world examples. Through this process of generation and evaluation, the generator can learn to create increasingly realistic content.
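The back-and-forth between the two networks can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how production GANs are built: the "networks" are single linear functions over one number, the "real" data is assumed to be drawn from a bell curve centered on 4, and every name and setting (`train_toy_gan`, the learning rate, the step count) is made up for the example.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, seed=0):
    """Train a 1-D toy GAN and return the learned parameters.

    Generator:      g(z) = a * z + b           (z is random noise)
    Discriminator:  D(x) = sigmoid(w * x + c)  (estimated chance that x is real)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(real_mean, 1.0)  # one example from the "real" dataset
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b                  # one example made by the generator

        # Discriminator step: minimize -[log D(x_real) + log(1 - D(x_fake))].
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(x_fake), i.e. try to fool the discriminator.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w         # gradient of the loss with respect to x_fake
        a -= lr * grad_x * z
        b -= lr * grad_x

    return {"a": a, "b": b, "w": w, "c": c}


if __name__ == "__main__":
    print(train_toy_gan())
```

Each pass through the loop mirrors the description above: the discriminator is nudged toward telling real from generated values, then the generator is nudged toward producing values the discriminator accepts. Real GANs follow the same alternating pattern with deep neural networks and batches of images or other data.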


In 2017, another significant breakthrough came when a group at Google released the famous Transformers paper, “Attention Is All You Need.” In this case, “attention” refers to mechanisms that let a model weigh how relevant every other word in a text is to the word it is currently processing, which provides context no matter how far apart the words are. The researchers proposed relying on these attention mechanisms alone and discarding other means of gleaning patterns from text, such as recurrence. Transformers represented a shift from processing a string of text word by word to analyzing an entire string all at once, making much larger models viable.
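To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product attention, the core operation described in the paper, written in plain Python. The three two-number "word vectors" are invented for illustration, and the sketch assumes the simplest case in which the same vectors serve as queries, keys, and values; real Transformers first pass them through learned projections and use many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Compare this position with every position in the sequence at once.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Hypothetical embeddings for a three-word sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(embeddings, embeddings, embeddings)
```

Every output vector is a weighted mix of all the input vectors, which is how each word ends up carrying context from the whole sentence rather than only from its neighbors.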

The implications of the Transformers architecture were significant both in terms of performance and training efficiency.

Models built on this architecture, such as OpenAI’s Generative Pre-trained Transformers (GPTs), now power various AI technologies like ChatGPT, GitHub Copilot, and Google Bard. These models are trained on incredibly large collections of human language and are known as Large Language Models (LLMs).

What’s the Difference Between AI, Machine Learning, and Generative AI?

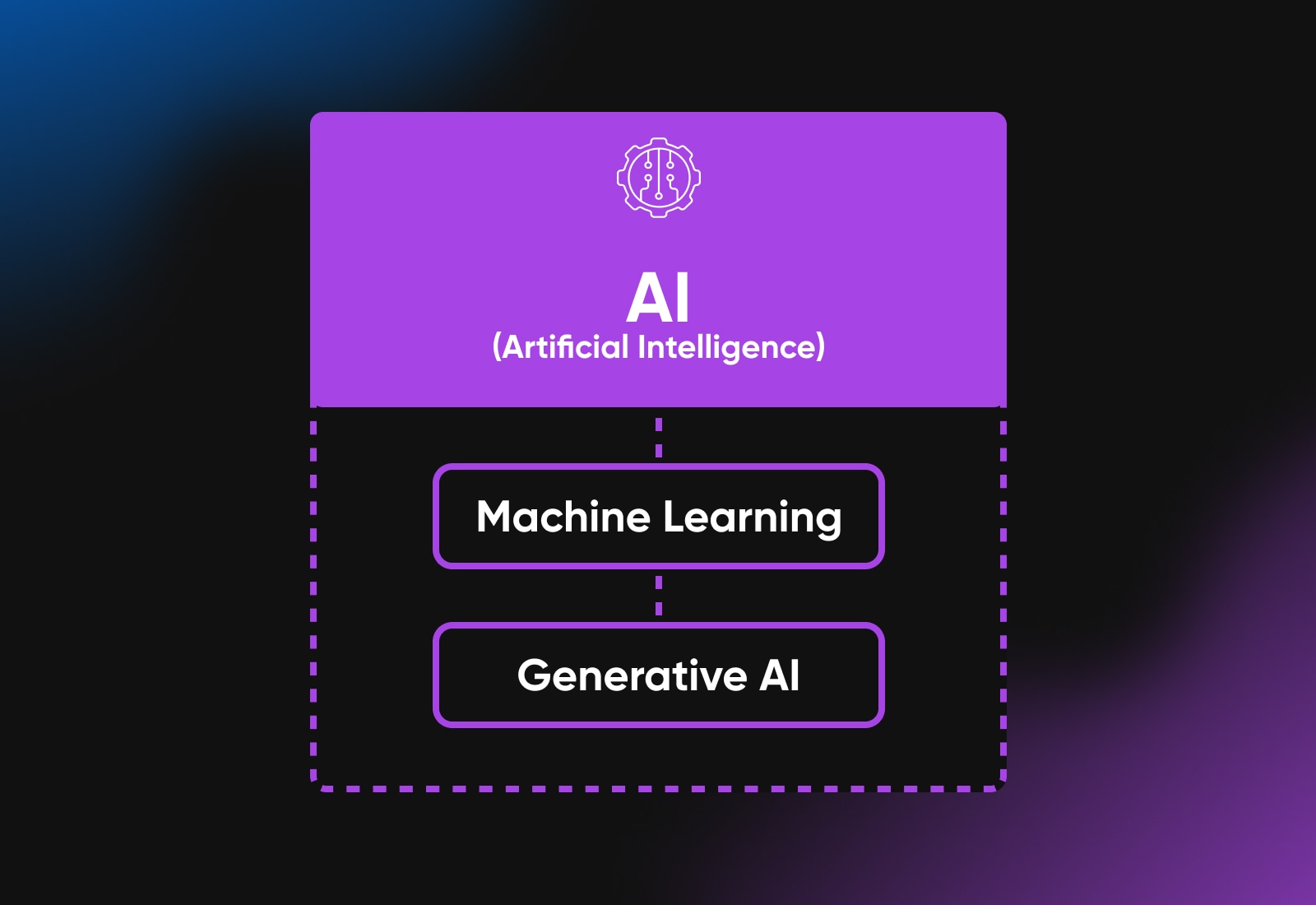

Generative AI, AI (Artificial Intelligence), and Machine Learning all belong to the same broad field of study, but each represents a different concept or level of specificity.

AI is the broadest term among the three. It refers to the concept of creating machines or software that can mimic human intelligence, perform tasks traditionally requiring human intellect, and improve their performance based on experience. AI encompasses a variety of subfields, including natural language processing (NLP), computer vision, robotics, and machine learning.

Machine Learning (ML) is a subset of AI and represents a specific approach to achieving AI. ML involves creating and using algorithms that allow computers to learn from data and make predictions or decisions, rather than being explicitly programmed to carry out a specific task. Machine learning models improve their performance as they are exposed to more data over time.

Generative AI is a subset of machine learning. It refers to models that can generate new content (or data) similar to the data they trained on. In other words, these models don’t just learn from data to make predictions or decisions – they create new, original outputs.

How does Generative AI Work?

Just like a painter might create a new painting or a musician might write a new song, generative AI creates new things based on patterns it has learned.

Think about how you might learn to draw a cat. You might start by looking at a lot of pictures of cats. Over time, you start to understand what makes a cat a cat: the shape of the body, the pointy ears, the whiskers, and so on. Then, when you’re asked to draw a cat from memory, you use these patterns you’ve learned to create a new picture of a cat. It won’t be a perfect copy of any one cat you’ve seen, but a new creation based on the general idea of “cat”.

Generative AI works similarly. It starts by learning from a lot of examples. These could be images, text, music, or other data. The AI analyzes these examples and learns about the patterns and structures that appear in them. Once it has learned enough, it can start to generate new examples that are similar to what it has seen before.

For instance, a generative AI model trained on lots of images of cats could generate a new image that looks like a cat. Or, a model trained on lots of text descriptions could write a new paragraph about a cat that sounds like a human wrote it. The generated content isn’t exact copies of what the AI has seen before but new pieces that fit the patterns it has learned.

The important point to understand is that the AI is not just copying what it has seen before but creating something new based on the patterns it has learned. That’s why it’s called “generative” AI.
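The learn-then-generate idea can be shown with about the smallest generative model possible. The sketch below is only an analogy under stated assumptions: the "training data" is a made-up list of cat weights, and the "model" is just an average and a spread, whereas real generative AI systems learn far richer patterns with neural networks.

```python
import random
import statistics


def fit(examples):
    """'Training': summarize the examples by their mean and standard deviation."""
    return statistics.mean(examples), statistics.stdev(examples)


def generate(model, count, seed=None):
    """'Generation': draw brand-new values from the learned distribution."""
    mean, stdev = model
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(count)]


# Hypothetical training data: weights of cats in kilograms.
cat_weights = [3.6, 4.1, 4.4, 3.9, 5.0, 4.7, 4.2, 3.8]
model = fit(cat_weights)
new_weights = generate(model, count=5, seed=42)

if __name__ == "__main__":
    print(new_weights)
```

The generated numbers are plausible cat weights that fit the pattern in the examples, yet they are freshly sampled rather than looked up from the training list.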


How is Generative AI Governed?

The short answer is that it’s not, which is another reason so many people are talking about AI right now.

AI is becoming increasingly powerful, but some experts are worried about the lack of regulation and governance over its capabilities. Leaders from Google, OpenAI, and Anthropic have all warned that generative AI could easily be used for wide-scale harm rather than good without regulation and an established ethics system.

Generative AI Models

For the generative AI tools that many people commonly use today, there are two main types of models: text-based and multimodal.

Text Models

A generative AI text model is a type of AI model that is capable of generating new text based on the data it’s trained on. These models learn patterns and structures from large amounts of text data and then generate new, original text that follows these learned patterns.

The exact way these models generate text can vary. Some models may use statistical methods to predict the likelihood of a particular word following a given sequence of words. Others, particularly those based on deep learning techniques, may use more complex processes that consider the context of a sentence or paragraph, semantic meaning, and even stylistic elements.
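The statistical approach mentioned above can be sketched with a bigram model, which simply counts which word tends to follow which. This is a toy under stated assumptions: the one-sentence corpus and the function names are invented for the example, and modern LLMs replace the counting with deep neural networks that look at far more context than a single previous word.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(model, word):
    """Estimated probability of each word that can follow `word`."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: n / total for w, n in counts.items()}


def generate(model, start, length, seed=None):
    """Generate up to `length` words by repeatedly sampling a likely next word."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        counts = model.get(words[-1])
        if not counts:
            break  # the last word was never followed by anything in training
        choices, weights = zip(*counts.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


# Hypothetical training corpus.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)

if __name__ == "__main__":
    print(next_word_probabilities(model, "the"))
    print(generate(model, "the", length=6, seed=0))
```

In this corpus, "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model estimates a 50% chance of "cat" and 25% for each of the others, then samples accordingly when generating.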

Generative AI text models are used in various applications, including chatbots, automatic text completion, text translation, creative writing, and more. Their goal is often to produce text that is indistinguishable from that written by a human.

Multimodal Models

A generative AI multimodal model is a type of AI model that can handle and generate multiple types of data, such as text, images, audio, and more. The term “multimodal” refers to the ability of these models to understand and generate different types of data (or modalities) together.

Multimodal models are designed to capture the correlations between different modes of data. For example, in a dataset that includes images and corresponding descriptions, a multimodal model could learn the relationship between the visual content and its textual description.
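One common way to picture this relationship is as a shared space in which an image and its description end up close together. The sketch below illustrates that idea only: the three-number vectors are hand-written stand-ins for what trained image and text encoders would produce, and the file names and captions are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Similarity of two vectors by direction: 1.0 means they point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical, hand-written embeddings. In a real multimodal model these
# vectors would come from trained image and text encoders.
image_embeddings = {
    "cat_photo.jpg": [0.9, 0.1, 0.2],
    "beach_photo.jpg": [0.1, 0.8, 0.3],
}
caption_embeddings = {
    "a cat sitting on a sofa": [0.8, 0.2, 0.1],
    "waves on a sandy beach": [0.2, 0.9, 0.2],
}


def best_caption(image_name):
    """Pick the caption whose embedding is closest to the image's embedding."""
    image_vec = image_embeddings[image_name]
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(image_vec, caption_embeddings[caption]),
    )


if __name__ == "__main__":
    for name in image_embeddings:
        print(name, "->", best_caption(name))
```

Matching a picture to the nearest caption in this way is a simplified view of how a model can connect visual content to text once both are represented in the same space.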

One use of multimodal models is in generating text descriptions for images (also known as image captioning). They can also be used to generate images from text descriptions (text-to-image synthesis). Other applications include speech-to-text and text-to-speech transformations, where the model generates text from audio and vice versa.

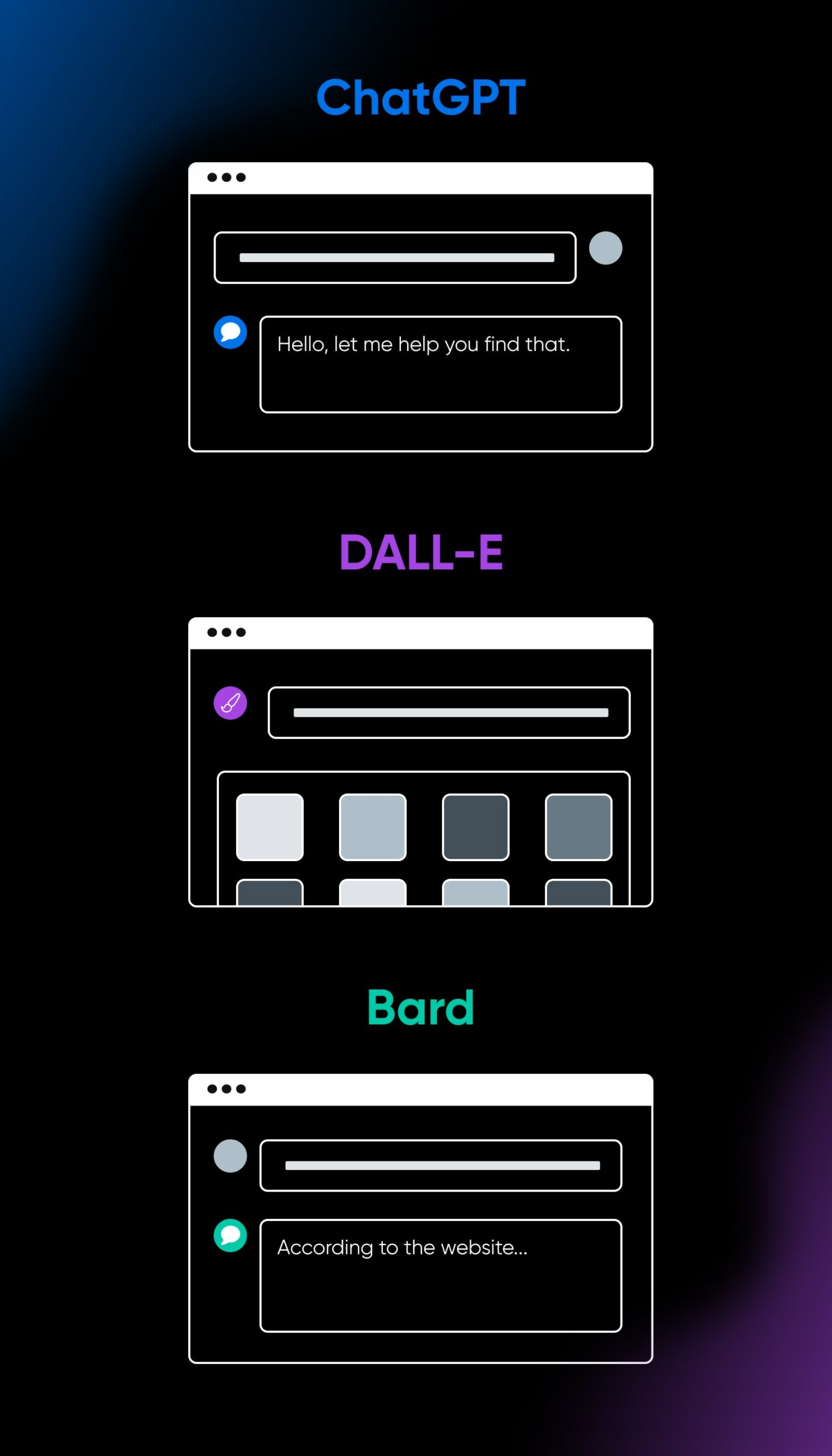

What are DALL-E, ChatGPT, and Bard?

DALL-E, ChatGPT, and Bard are three of the most common, most-used, and most powerful generative AI tools available to the general public.

ChatGPT

ChatGPT is a conversational AI tool developed by OpenAI. It is based on the GPT (Generative Pre-trained Transformer) architecture, one of the most advanced transformers available today. ChatGPT is designed to engage in conversational interactions with users, providing human-like responses to various prompts and questions. OpenAI had already released earlier GPT models, including GPT-3, which developers could access through an API, before it launched ChatGPT publicly in November 2022 on GPT-3.5. Nowadays, GPT-3.5 and GPT-4 are available to some users. ChatGPT can be used in a web browser or mobile app, making it one of the most accessible and popular generative AI tools today.

DALL-E

DALL-E is an AI model designed to generate original images from textual descriptions. Unlike traditional image generation models that manipulate existing images, DALL-E creates images entirely from scratch based on textual prompts. The model is trained on a massive dataset of text-image pairs, using a combination of unsupervised and supervised learning techniques.

Bard

Bard is Google’s entry into the AI chatbot market. Google was an early pioneer in AI language processing, offering open-source research for others to build upon. Bard is built on Google’s most advanced LLM, PaLM 2, which allows it to quickly generate multimodal content, including real-time images.

15 Generative AI Tools You Can Try Right Now

While ChatGPT, DALL-E, and Bard are some of the biggest players in the field of generative AI, there are many other tools you can try (note that some of these tools require paid memberships or have waiting lists):

- Text generation tools: Jasper, Writer, Lex

- Image generation tools: Midjourney, Stable Diffusion, DALL-E

- Music generation tools: Amper, Dadabots, MuseNet

- Code generation tools: Codex, GitHub Copilot, Tabnine

- Voice generation tools: Descript, Listnr, Podcast.ai

What is Generative AI Used For?

Generative AI already has countless use cases across many different industries, with new ones constantly emerging.

Here are some of the most common (yet still exciting!) ways generative AI is used:

- In the finance industry to watch transactions and compare them to people’s usual spending habits to detect fraud faster and more reliably.

- In the legal industry to design and interpret contracts and other legal documents or to analyze evidence (but not to cite case law, as one lawyer learned the hard way).

- In the manufacturing industry to run quality control on manufactured items and automate the process of finding defective pieces or parts.

- In the media industry to generate content more economically, help translate it into new languages, dub video and audio content in actors’ synthesized voices, and more.

- In the healthcare industry by creating decision trees for diagnostics and quickly identifying suitable candidates for research and trials.

There are many other creative and unique ways people have found to apply generative AI to their jobs and fields, and more are discovered all the time. What we’re seeing is certainly just the tip of the iceberg of what AI can do in different settings.

What are the Benefits of Generative AI?

Generative AI has many benefits, both potential and realized. Here are some ways it can benefit how we work and create.

Better Efficiency and Productivity

Generative AI can automate tasks and workflows that would otherwise be time-consuming or tedious for humans, such as content creation or data generation. This can increase efficiency and productivity in many contexts, optimizing how we work and freeing up human time for more complex, creative, or strategic tasks.

Increased Scalability

Generative AI models can generate outputs at a scale that would be impossible for humans alone. For example, in customer service, AI chatbots can handle a far greater volume of inquiries than human operators, providing 24/7 support without the need for breaks or sleep.

Enhanced Creativity and Innovation

Generative AI can generate new ideas, designs, and solutions that humans may not think of. This can be especially valuable in fields like product design, data science, scientific research, and art, where fresh perspectives and novel ideas are highly valued.

Improved Decision-Making and Problem-Solving

Generative AI can aid decision-making processes by generating a range of potential solutions or scenarios. This can help decision-makers consider a broader range of options and make more informed choices.

Accessibility

By generating content, generative AI can help make information and experiences more accessible. For example, AI could generate text descriptions of images for visually impaired users or help translate content into different languages to reach a broader audience.

What are the Limitations of Generative AI?

While generative AI has many benefits, it also has limitations. Some are related to the technology itself and the shortcomings it has yet to overcome, and some are more existential and will impact generative AI as it continues to evolve.

Quality of Generated Content

While generative AI has made impressive strides, the quality of the content it generates can still vary. At times, outputs may not make sense: they may lack coherence or be factually incorrect. This is especially the case for more complex or nuanced tasks.

Overdependence on Training Data

Generative AI models can sometimes overfit to their training data, meaning they learn to mimic their training examples very closely but struggle to generalize to new, unseen data. They can also be hindered by the quality and bias of their training data, resulting in similarly biased or poor-quality outputs (more on that below).
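Overfitting is easy to demonstrate on a small scale. The sketch below is an illustration under stated assumptions rather than a generative model: it invents noisy data that follows the pattern y = 2x, then compares a "memorizer" that repeats the closest training example with a simple straight-line fit. All names and settings are made up for the example.

```python
import random


def make_data(count, rng):
    """Noisy samples of the underlying pattern y = 2x."""
    data = []
    for _ in range(count):
        x = rng.uniform(0.0, 10.0)
        data.append((x, 2.0 * x + rng.gauss(0.0, 1.0)))
    return data


def memorizer(train):
    """Overfit 'model': answers with the label of the closest training point."""
    def predict(x):
        nearest = min(train, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict


def fitted_line(train):
    """Simpler model: least-squares straight line through the training data."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in train)
        / sum((x - mean_x) ** 2 for x, _ in train)
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def mean_squared_error(predict, data):
    """Average squared gap between predictions and true values."""
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)
train, held_out = make_data(30, rng), make_data(30, rng)

if __name__ == "__main__":
    for name, build in [("memorizer", memorizer), ("fitted line", fitted_line)]:
        predict = build(train)
        print(name,
              "train error:", round(mean_squared_error(predict, train), 3),
              "held-out error:", round(mean_squared_error(predict, held_out), 3))
```

The memorizer scores a perfect zero error on the examples it has already seen, because it has copied the noise along with the pattern, but it has no such guarantee on the held-out data. A gap between training and held-out error like this is the usual warning sign of overfitting.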

Limited Creativity

While generative AI can produce novel combinations of existing ideas, its ability to truly innovate or create something entirely new is limited. It operates based on patterns it has learned, and it lacks the human capacity for spontaneous creativity or intuition.

Computational Resources

Training generative AI models often requires substantial computational resources. Usually, you’ll need to use high-performance GPUs (Graphics Processing Units) capable of performing the parallel processing required by machine learning algorithms. GPUs are expensive to purchase outright and also require significant energy.

A 2019 paper from the University of Massachusetts, Amherst, estimated that training a large AI model could generate as much carbon dioxide as five cars over their entire lifetimes. This brings into question the environmental impact of building and using generative AI models and the need for more sustainable practices as AI continues to advance.

What’s the Controversy Surrounding Generative AI?

Beyond the limitations, there are also some serious concerns around generative AI, especially as it grows rapidly with little to no regulation or oversight.

Ethical Concerns

Ethically, there are concerns about the misuse of generative AI for creating misinformation or generating content that promotes harmful ideologies. AI models can be used to impersonate individuals or entities, generating text or media that appears to originate from them, potentially leading to misinformation or identity misuse. AI models may also generate harmful or offensive content, either intentionally due to malicious use or unintentionally due to biases in their training data.

Many leading experts in the field are calling for regulations (or at least ethical guidelines) to promote responsible AI use, but they have yet to gain much traction, even as AI tools have begun to take root.

Bias in Training Data

Bias in generative AI is another significant issue. Since AI models learn from the data they are trained on, they may reproduce and amplify existing biases in that data. This can lead to unfair or discriminatory outputs, perpetuating harmful stereotypes or disadvantaging certain groups.

Questions About Copyright and Intellectual Property

Legally, the use of generative AI introduces complex questions about copyright and intellectual property. For example, if a generative AI creates a piece of music or art that closely resembles an existing work, it’s unclear who owns the rights to the AI-generated piece and whether its creation constitutes copyright infringement. Additionally, if an AI model generates content based on copyrighted material included in its training data, it could potentially infringe on the original creators’ rights.

In the context of multimodal AI creation based on existing art, the copyright implications are still uncertain. If the AI’s output is sufficiently original and transformative, it may be considered a new work. However, if it closely mimics existing art, it could potentially infringe on the original artist’s copyright. Whether the original artist should be compensated for such AI-generated works is a complex question that intersects with legal, ethical, and economic considerations.

Generative AI FAQ

Below are some of the most frequently asked questions about generative AI to help you round out your knowledge of the subject.

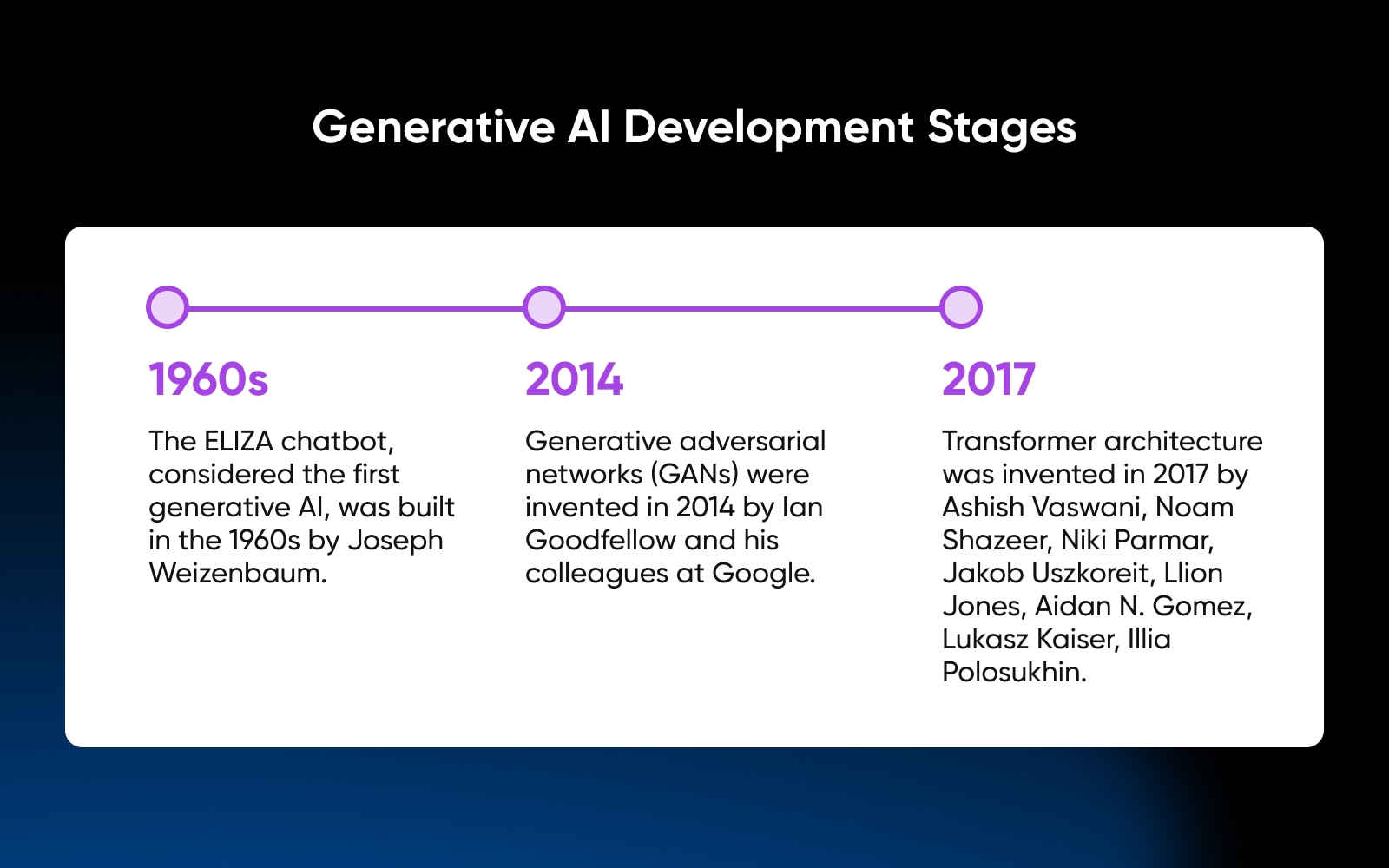

Who Invented Generative AI?

Generative AI wasn’t invented by a single person. It has been developed in different stages, with contributions from numerous researchers and coders over time.

The ELIZA chatbot, often cited as one of the earliest precursors of generative AI, was built in the 1960s by Joseph Weizenbaum.

Generative adversarial networks (GANs) were invented in 2014 by Ian Goodfellow and his colleagues, then at the Université de Montréal.

The Transformer architecture was introduced in 2017 by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin.

Many more scientists, researchers, tech workers, and more are continuing the work to advance generative AI in the years to come.

What Does it Take to Build a Generative AI Model?

Building a generative AI model requires large amounts of training data, substantial computing resources, and machine learning expertise. It can be a complex and resource-intensive process, often requiring a team of skilled data scientists and engineers. Luckily, many tools and resources are available to make this process more accessible, including open-source research on generative AI models that have already been built.

How do you Train a Generative AI Model?

Training a generative AI model involves a lot of steps – and a lot of time.

What Kinds of Output can Generative AI Create?

Generative AI can create a wide variety of outputs, including text, images, video, motion graphics, audio, 3-D models, data samples, and more.

Is Generative AI Really Taking People’s Jobs?

Kind of. This is a complex issue with many factors at play: the rate of technological advancement, the adaptability of different industries and workforces, economic policies, and more.

AI has the potential to automate repetitive, routine tasks, and generative AI can already perform some tasks as well as a human can (but not writing articles – a human wrote this).

It’s important to remember that generative AI, like the AI before it, has the potential to create new jobs as well. For example, generative AI might automate some tasks in content creation, design, or programming, potentially reducing the need for human labor in these areas, but it’s also enabling new technologies, services, and industries that didn’t exist before.

And while generative AI can automate certain tasks, it doesn’t replicate human creativity, critical thinking, and decision-making abilities, which are crucial in many jobs. That’s why it’s more likely that generative AI will change the nature of work rather than completely replace humans.

Will AI ever Become Sentient?

This is another tough question to answer. The consensus among AI researchers is that AI, including generative AI, has yet to achieve sentience, and it’s uncertain when or even if it ever will. Sentience refers to the capacity to have subjective experiences or feelings, self-awareness, or consciousness, and it currently distinguishes humans and other animals from machines.

While AI has made impressive strides and can mimic certain aspects of human intelligence, it does not “understand” in the way humans do. For example, a generative AI model like GPT-3 can generate text that seems remarkably human-like, but it doesn’t actually understand the content it’s generating. It’s essentially finding patterns in data and predicting the next piece of text based on those patterns.

Even if we get to a point where AI can mimic human behavior or intelligence so well that it appears sentient, that wouldn’t necessarily mean it truly is sentient. The question of what constitutes sentience and how we could definitively determine whether an AI is sentient are complex philosophical and scientific questions that are far from being answered.

The Future of Generative AI

No one can predict the future – not even generative AI (yet).

The future of generative AI is poised to be exciting and transformative. AI’s capabilities will likely continue to expand and evolve, driven by advancements in underlying technologies, increasing data availability, and ongoing research and development efforts.

Underscoring any optimism about AI’s future, though, are concerns about letting AI tools continue to advance unchecked. As AI becomes more prominent in new areas of our lives, it may come with both benefits and potential harms.

There is one thing we know for sure: The generative AI age is just beginning, and we’re lucky to get to witness it firsthand.
